[Campus] Generative AI on Campus: KHU's Progress, Gaps, and Future Direction

A student using generative AI on campus

As generative artificial intelligence (AI) tools have become more common on campus, Kyung Hee University (KHU) has introduced policies since 2023 to promote their responsible use. Faculty and students, however, say the current efforts still fall short.

They assert the University has been slow to keep up with the rapid changes in AI. Given the growing importance of AI in education, many call for the KHU community to engage in a collaborative dialogue to establish a comprehensive framework for ethical AI usage.

KHU’s First Steps: Guidelines and Monitoring Tools

KHU’s primary policy, the ChatGPT Application Guideline, was released in 2023. It promotes a sense of responsibility and emphasizes the precautions to take when using AI, particularly ChatGPT.

Photo: KHU (khu.ac.kr)

The guideline includes three parts: an overview of ChatGPT, guidance for instructors, and recommendations for students. The guidance for instructors urges faculty to clearly define AI use policies in course syllabi. For students, it emphasizes following instructions, fact-checking AI-generated content, and citing sources. The guideline has remained in effect on campus without revision since its release.

The Center for Teaching & Learning (CTL) supported the release, saying the guideline was needed to mitigate the adverse effects of bias and errors in generative AI output while upholding academic integrity. CTL added that it expects KHU faculty and students to adhere to the academic standards and principles outlined in the guideline.

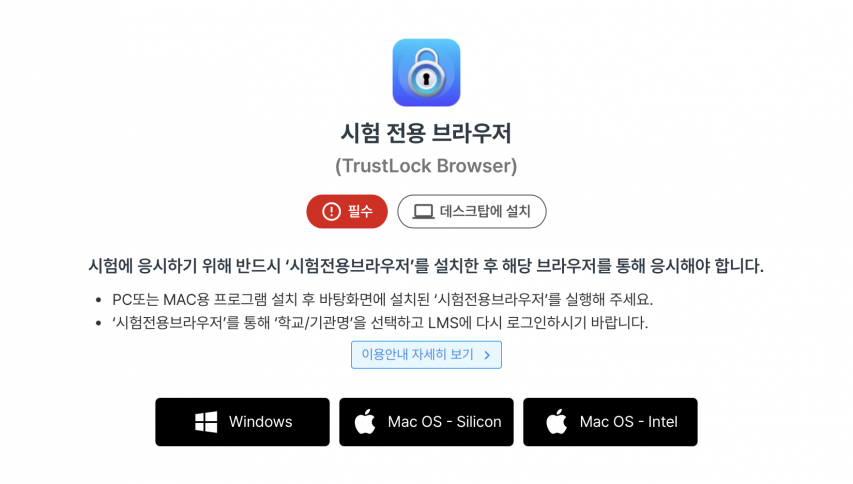

KHU also introduced the TrustLock Browser in April 2025 to prevent cheating on online exams. The program restricts browser functions and blocks other applications during tests.

Photo: KHU (khu.ac.kr)

Faculty Perspectives: A Good Start, But Not Enough

Many professors agree that an AI policy is necessary, but they say the current guideline needs to be updated and clarified. Professor Jin Eun-jin of Humanitas College said, “The current guideline is too broad and unclear, making it difficult for both instructors and students to apply.” She added, “It is outdated and missing key details that were relevant when it was first created but no longer reflects the current reality.”

Prof. Ryu Doo-won from the Graduate School of Technology Management, on the other hand, sees the vagueness as intentional. “While a single guideline is uniformly adopted throughout the campus, the approach toward the application varies depending on the discipline and instructor. Therefore, it is challenging to develop a detailed guideline.” Instead of adding more rules, Prof. Ryu proposed a flexible solution: “By incorporating the phrase ‘detailed regulations may vary by instructor’, the University can ensure the autonomy of instructors, which could be a better alternative to making a detailed guideline.”

Prof. Ryu acknowledged that the TrustLock Browser has limitations, calling it “not an absolute solution” and noting that “loopholes exist.” However, he said that the initiative is meaningful, adding, “Although it is an inconvenience for both instructors and students, it makes the act of academic dishonesty itself more difficult.”

Both professors praised the University's efforts to establish AI usage ethics and prevent cheating. However, they emphasized that with AI evolving so quickly, KHU must keep its policies updated and its tools continually improved.

AI in the Classroom: Coexistence Outweighs Control

As the use of AI in education becomes more common, professors say universities need to change their approach. Instead of trying to control or ban AI, they argue, universities should focus on teaching students how to use it responsibly. Prof. Jin emphasized that the role of universities must shift from blocking AI to teaching students to use it well. She stressed the need for AI literacy education, meaning the ability to evaluate AI’s answers critically rather than accepting them without question.

One proposed solution is the creation of a shared resource platform. Prof. Jin said, “We need a platform where concrete examples, teaching methods, and research materials applicable to education can be shared.” Sungkyunkwan University has already launched a dedicated website to systematically share AI usage methods and ethical guidelines.

Prof. Ryu also offered advice to students. Cautioning them about the role of AI in their education, he said, “It is a tool to help express what one already knows. Simply accepting its results does not constitute learning.” He then suggested an ideal method for its use: “Ask questions based on what you have learned, and if any doubts still remain, ask your professor.”

The Way Forward

Although the University responded early to AI use in education, it has not kept pace with the rapidly evolving technology. As a result, students are left in a gray zone, unsure of how to use AI responsibly and effectively. With various solutions now being suggested, from regular updates to the guideline to an expansion of AI literacy education, the University stands at a pivotal moment and must decide how emerging technologies should properly be used.
